EnOcean is an energy harvesting wireless technology used primarily in building automation systems and smart homes. Modules based on this technology combine micro energy converters with ultra low power electronics and enable wireless communication between battery-less wireless sensors, actuators and even gateways. The communication is based on so-called EnOcean telegrams. Since 2012, the EnOcean standard has been specified as the international standard ISO/IEC 14543-3-10.

The EnOcean Alliance is an association of several companies that develops and promotes self-powered wireless monitoring and control systems for buildings by formalizing the interoperable wireless standard. The alliance makes some of its technical specifications freely available on its website.


To send an EnOcean telegram, you need a piece of hardware connected to your host, e.g. an EnOcean USB300 USB stick for your personal computer or an EnOcean Pi SoC-Gateway TRX 8051 for your Raspberry Pi. In this sample, we use the USB300 and a small Java program to send a telegram. The following photo shows a USB300 stick:

EnOcean USB300 Stick

The EnOcean radio protocol (ERP) is optimised to transmit information using extremely little power, such as the energy generated by piezo elements. The information sent between two devices is called an EnOcean telegram. The structure of the payload depends on the telegram type and the function of the device and is defined in the EnOcean Equipment Profiles (EEP). The technical properties of a device are described by three profile elements:

- The ERP radio telegram type: RORG (range 00…FF, 8 bits)

- Basic functionality of the data content: FUNC (range 00…3F, 6 bits)

- Type of device in its individual characteristics: TYPE (range 00…7F, 7 bits)
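EEP profiles are commonly written as RORG-FUNC-TYPE in hexadecimal, for example A5-02-05 or D2-01-12. As a minimal sketch, the following Java class (the name `EepId` is hypothetical, not part of any EnOcean library) checks each element against the ranges listed above and formats the identifier:

```java
import java.util.Locale;

/** Hypothetical helper: an EEP identifier built from RORG, FUNC and TYPE. */
public final class EepId {

    private final int rorg; // 8 bits: 0x00..0xFF
    private final int func; // 6 bits: 0x00..0x3F
    private final int type; // 7 bits: 0x00..0x7F

    public EepId(int rorg, int func, int type) {
        this.rorg = checkRange("RORG", rorg, 0xFF);
        this.func = checkRange("FUNC", func, 0x3F);
        this.type = checkRange("TYPE", type, 0x7F);
    }

    /** Rejects values outside 0..max, where max is the largest value the bit width allows. */
    private static int checkRange(String name, int value, int max) {
        if (value < 0 || value > max) {
            throw new IllegalArgumentException(
                    String.format(Locale.ROOT, "%s out of range 00..%02X: %d", name, max, value));
        }
        return value;
    }

    /** Formats the identifier as RORG-FUNC-TYPE with two hex digits per element. */
    @Override
    public String toString() {
        return String.format(Locale.ROOT, "%02X-%02X-%02X", rorg, func, type);
    }

    public static void main(String[] args) {
        System.out.println(new EepId(0xD2, 0x01, 0x12)); // D2-01-12
        System.out.println(new EepId(0xA5, 0x02, 0x05)); // A5-02-05
    }
}
```

Because FUNC has only 6 bits, a value such as 0x40 is rejected even though it fits into a byte.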

Since version 2.5 of the EEP, the various radio telegram types are grouped by their radio organisation number (RORG):

| Telegram | RORG | Description |
|---|---|---|
| RPS | F6 | Repeated Switch Communication |
| 1BS | D5 | 1 Byte Communication |
| 4BS | A5 | 4 Byte Communication |
| VLD | D2 | Variable Length Data |
| MSC | D1 | Manufacturer Specific Communication |
| ADT | A6 | Addressing Destination Telegram |
| SM_LRN_REQ | C6 | Smart Ack Learn Request |
| SM_LRN_ANS | C7 | Smart Ack Learn Answer |
| SM_REC | A7 | Smart Ack Reclaim |
| SYS_EX | C5 | Remote Management |
| SEC | 30 | Secure Telegram |
| SEC_ENCAPS | 31 | Secure Telegram with RORG encapsulation |
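When receiving or sending telegrams in software, the table above can be mirrored as a lookup from the RORG byte to the telegram type. The following enum is a hypothetical sketch, not taken from an EnOcean library; since Java identifiers cannot start with a digit, 1BS and 4BS are named `BS1` and `BS4` here:

```java
import java.util.Optional;

/** Hypothetical enum of the radio telegram types (RORG) from the table above. */
public enum Rorg {
    RPS(0xF6), BS1(0xD5), BS4(0xA5), VLD(0xD2), MSC(0xD1), ADT(0xA6),
    SM_LRN_REQ(0xC6), SM_LRN_ANS(0xC7), SM_REC(0xA7), SYS_EX(0xC5),
    SEC(0x30), SEC_ENCAPS(0x31);

    private final int value;

    Rorg(int value) {
        this.value = value;
    }

    public int value() {
        return value;
    }

    /** Maps a RORG byte (e.g. the first byte of the data field) to its telegram type. */
    public static Optional<Rorg> fromByte(byte b) {
        int unsigned = b & 0xFF; // Java bytes are signed: 0xD2 is stored as -46
        for (Rorg r : values()) {
            if (r.value == unsigned) {
                return Optional.of(r);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(fromByte((byte) 0xD2)); // Optional[VLD]
        System.out.println(fromByte((byte) 0x00)); // Optional.empty
    }
}
```

Masking with `0xFF` matters because values such as F6 or D2 are negative when stored in a Java `byte`.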

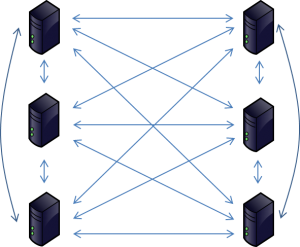

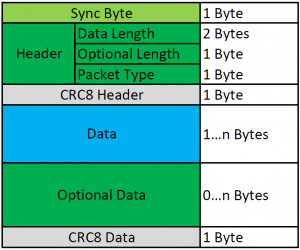

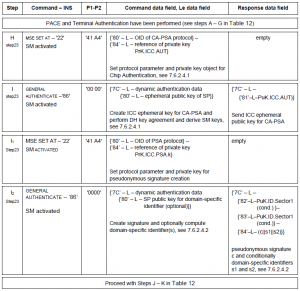

Here we use the VLD (Variable Length Data) type to take a closer look at EnOcean telegrams. VLD telegrams can carry a payload of variable length. The following graphic shows the structure of an EnOcean telegram based on the EnOcean Serial Protocol 3 (ESP3):

Structure of EnOcean telegram

ESP3 is a point-to-point protocol with a packet data structure. Every packet (or frame) consists of a header, data and optional data. As the structure shows, the length of the data field is encoded in the header with two bytes, which suggests a maximum length of 65535 bytes. In practice, however, the data of such a telegram is limited to 21 bytes due to the limitations of the low power electronics. After subtracting the protocol overhead contained in these bytes, a net payload of 14 bytes remains. The following example demonstrates how to send a telegram with the 14-byte payload ’00 11 22 33 44 55 66 77 88 99 AA BB CC DD’. First, let's look at the telegram:

| Field | Value |
|---|---|
| Telegram | 55 00 14 07 01 65 D2 00 11 22 33 44 55 66 77 88 99 AA BB CC DD 00 00 00 00 00 01 FF FF FF FF 44 00 0B |
| Sync byte | 55 |
| Header | 00 14 07 01 |
| Length data | 20 (0x14) |
| Length optional data | 7 (0x07) |
| Packet type | 01 (RADIO_ERP1) |
| CRC8 header | 65 |
| Data | D2 00 11 22 33 44 55 66 77 88 99 AA BB CC DD 00 00 00 00 00 |
| RORG | D2 (VLD) |
| Data payload | 00 11 22 33 44 55 66 77 88 99 AA BB CC DD |
| Sender ID | 00 00 00 00 |
| Status | 00 |
| Optional data | 01 FF FF FF FF 44 00 |
| SubTelNumber | 01 |
| Destination ID | FF FF FF FF (broadcast) |
| dBm | 68 (0x44) |
| Security level | 00 |
| CRC8 data | 0B |
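The byte offsets in the table can be expressed directly in code. The following sketch (the class `Esp3Fields` and its methods are hypothetical) splits the sample frame into the fields listed above. It assumes the 7-byte optional data layout of a RADIO_ERP1 packet shown in the table and does not verify the CRC8 checksums:

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical decoder that splits an ESP3 RADIO_ERP1 frame into the fields of the table. */
public final class Esp3Fields {

    /** Formats bytes[from..to) as space-separated hex, e.g. "FF FF FF FF". */
    static String hex(byte[] bytes, int from, int to) {
        StringBuilder sb = new StringBuilder();
        for (int i = from; i < to; i++) {
            sb.append(String.format("%02X ", bytes[i]));
        }
        return sb.toString().trim();
    }

    public static Map<String, String> decode(byte[] f) {
        if ((f[0] & 0xFF) != 0x55) {
            throw new IllegalArgumentException("missing sync byte 0x55");
        }
        int dataLength = ((f[1] & 0xFF) << 8) | (f[2] & 0xFF); // two bytes, big-endian
        int optLength = f[3] & 0xFF;
        int data = 6;                // after sync byte, 4 header bytes and CRC8 header
        int opt = data + dataLength; // optional data follows the data field

        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("Length data", String.valueOf(dataLength));
        fields.put("Length optional data", String.valueOf(optLength));
        fields.put("Packet type", hex(f, 4, 5));
        fields.put("RORG", hex(f, data, data + 1));
        fields.put("Data payload", hex(f, data + 1, opt - 5)); // between RORG and sender ID
        fields.put("Sender ID", hex(f, opt - 5, opt - 1));
        fields.put("Status", hex(f, opt - 1, opt));
        fields.put("SubTelNumber", hex(f, opt, opt + 1));
        fields.put("Destination ID", hex(f, opt + 1, opt + 5));
        fields.put("dBm", hex(f, opt + 5, opt + 6));
        fields.put("Security level", hex(f, opt + 6, opt + 7));
        fields.put("CRC8 data", hex(f, opt + optLength, opt + optLength + 1));
        return fields;
    }

    public static void main(String[] args) {
        byte[] frame = {
                (byte) 0x55, 0x00, 0x14, 0x07, 0x01, 0x65,
                (byte) 0xD2, 0x00, 0x11, 0x22, 0x33, 0x44, 0x55, 0x66, 0x77,
                (byte) 0x88, (byte) 0x99, (byte) 0xAA, (byte) 0xBB, (byte) 0xCC, (byte) 0xDD,
                0x00, 0x00, 0x00, 0x00, 0x00,
                0x01, (byte) 0xFF, (byte) 0xFF, (byte) 0xFF, (byte) 0xFF, 0x44, 0x00,
                0x0B };
        decode(frame).forEach((name, value) -> System.out.println(name + ": " + value));
    }
}
```

The payload length is derived from the header rather than hard-coded, so the same offsets work for VLD telegrams shorter than 14 bytes.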

The following Java code demonstrates one way to send this telegram via the USB300. It uses the RXTX library to access the serial port.

```java
import java.io.OutputStream;

import gnu.io.CommPort;
import gnu.io.CommPortIdentifier;
import gnu.io.SerialPort;

public class EnOceanSample {

    static SerialPort serialPort;

    // Serial port of the USB300; adjust to your system
    static String serialPortName = "COM3";

    public static void main(String[] args) {

        // Complete ESP3 frame: sync byte, header, CRC8 header, data, optional data, CRC8 data
        byte[] sampleTelegram = new byte[] {
                (byte) 0x55, (byte) 0x00, (byte) 0x14, (byte) 0x07, (byte) 0x01, (byte) 0x65,
                (byte) 0xD2, (byte) 0x00, (byte) 0x11, (byte) 0x22, (byte) 0x33, (byte) 0x44, (byte) 0x55,
                (byte) 0x66, (byte) 0x77, (byte) 0x88, (byte) 0x99, (byte) 0xAA, (byte) 0xBB, (byte) 0xCC, (byte) 0xDD,
                (byte) 0x00, (byte) 0x00, (byte) 0x00, (byte) 0x00, (byte) 0x00,
                (byte) 0x01, (byte) 0xFF, (byte) 0xFF, (byte) 0xFF, (byte) 0xFF, (byte) 0x44, (byte) 0x00,
                (byte) 0x0B };

        try {
            CommPortIdentifier portIdentifier = CommPortIdentifier
                    .getPortIdentifier(serialPortName);

            if (portIdentifier.isCurrentlyOwned()) {
                System.err.println("Port is currently in use!");
            } else {
                CommPort commPort = portIdentifier.open("EnOceanSample", 3000);

                if (commPort instanceof SerialPort) {
                    serialPort = (SerialPort) commPort;

                    // settings for EnOcean (ESP3): 57600 baud, 8 data bits, 1 stop bit, no parity
                    serialPort.setSerialPortParams(57600, SerialPort.DATABITS_8,
                            SerialPort.STOPBITS_1, SerialPort.PARITY_NONE);

                    System.out.println("Sending Telegram...");
                    OutputStream outputStream = serialPort.getOutputStream();
                    outputStream.write(sampleTelegram);
                    outputStream.flush();
                    outputStream.close();
                    System.out.println("Telegram sent");
                } else {
                    // release the port we opened but cannot use
                    commPort.close();
                    System.err.println("Only serial ports are handled!");
                }
            }
        } catch (Exception ex) {
            // report errors such as NoSuchPortException or PortInUseException instead of ignoring them
            ex.printStackTrace();
        } finally {
            // always release the serial port, even if sending failed
            if (serialPort != null) {
                serialPort.close();
            }
        }
    }
}
```

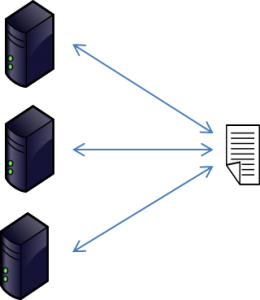
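Instead of hard-coding `sampleTelegram` including its checksums, the frame can also be computed. ESP3 protects the header and the data with CRC8 checksums based on the polynomial x^8 + x^2 + x + 1 (0x07): the header checksum covers the four header bytes, the data checksum covers data and optional data. The following sketch uses hypothetical names (`Esp3Frame`, `crc8`, `build`) and assumes a RADIO_ERP1 packet (packet type 01); with the data and optional data from the table it reproduces the sample telegram, including the checksums 65 and 0B:

```java
import java.io.ByteArrayOutputStream;

/** Hypothetical helper: builds an ESP3 RADIO_ERP1 frame including both CRC8 checksums. */
public final class Esp3Frame {

    static final int SYNC_BYTE = 0x55;
    static final int PACKET_TYPE_RADIO_ERP1 = 0x01;

    /** CRC8 with polynomial 0x07 (x^8 + x^2 + x + 1), initial value 0, MSB first. */
    public static int crc8(byte[] bytes, int offset, int length) {
        int crc = 0;
        for (int i = offset; i < offset + length; i++) {
            crc ^= bytes[i] & 0xFF;
            for (int bit = 0; bit < 8; bit++) {
                crc = ((crc & 0x80) != 0) ? ((crc << 1) ^ 0x07) : (crc << 1);
                crc &= 0xFF;
            }
        }
        return crc;
    }

    public static byte[] build(byte[] data, byte[] optionalData) {
        byte[] header = {
                (byte) (data.length >> 8), (byte) data.length, // data length, big-endian
                (byte) optionalData.length,                   // optional data length
                (byte) PACKET_TYPE_RADIO_ERP1 };

        byte[] body = new byte[data.length + optionalData.length];
        System.arraycopy(data, 0, body, 0, data.length);
        System.arraycopy(optionalData, 0, body, data.length, optionalData.length);

        ByteArrayOutputStream out = new ByteArrayOutputStream();
        out.write(SYNC_BYTE);
        out.write(header, 0, header.length);
        out.write(crc8(header, 0, header.length)); // CRC8 header
        out.write(body, 0, body.length);
        out.write(crc8(body, 0, body.length));     // CRC8 data
        return out.toByteArray();
    }

    public static void main(String[] args) {
        byte[] data = new byte[20];      // sender ID and status stay 00
        data[0] = (byte) 0xD2;           // RORG: VLD
        for (int i = 0; i < 14; i++) {
            data[1 + i] = (byte) (i * 0x11); // payload 00 11 22 ... DD
        }
        byte[] optionalData = { 0x01, (byte) 0xFF, (byte) 0xFF, (byte) 0xFF, (byte) 0xFF, 0x44, 0x00 };

        StringBuilder hex = new StringBuilder();
        for (byte b : build(data, optionalData)) {
            hex.append(String.format("%02X ", b));
        }
        // prints the same 34 bytes as sampleTelegram
        System.out.println(hex.toString().trim());
    }
}
```

The resulting array could be passed to `outputStream.write(...)` in place of `sampleTelegram`.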

This approach does not allow sending telegrams with a large payload. If the information to be sent exceeds the limit described above, you can use a mechanism called ‘chaining’, which requires a special sequence of telegrams. All protocol steps for chaining are specified in EO3000I_API.

Attention: In Europe, EnOcean products use the frequency 868.3 MHz. This frequency can be used by everybody free of charge, but traffic is limited; in Germany, for example, a device may only transmit for 36 seconds within one hour.
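A limit of 36 seconds per hour corresponds to a duty cycle of 36 / 3600 = 1%. An application that sends frequently could keep track of its own airtime against such a budget. The following sliding-window sketch is hypothetical and not part of any EnOcean or RXTX API; the airtime per telegram is passed in by the caller because it is not specified here, and each transmission is simply accounted at its start time:

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical sliding-window airtime budget, e.g. 36 s per 3600 s (1% duty cycle). */
public final class DutyCycleBudget {

    private final long windowMillis;
    private final long budgetMillis;
    // each entry: {startMillis, airtimeMillis} of an accepted transmission
    private final Deque<long[]> history = new ArrayDeque<>();
    private long usedMillis = 0;

    public DutyCycleBudget(long windowMillis, long budgetMillis) {
        this.windowMillis = windowMillis;
        this.budgetMillis = budgetMillis;
    }

    /** Returns true and records the transmission if it still fits into the current window. */
    public boolean tryTransmit(long nowMillis, long airtimeMillis) {
        // drop transmissions that started before the current window
        while (!history.isEmpty() && history.peekFirst()[0] <= nowMillis - windowMillis) {
            usedMillis -= history.pollFirst()[1];
        }
        if (usedMillis + airtimeMillis > budgetMillis) {
            return false;
        }
        history.addLast(new long[] { nowMillis, airtimeMillis });
        usedMillis += airtimeMillis;
        return true;
    }

    public static void main(String[] args) {
        DutyCycleBudget budget = new DutyCycleBudget(3_600_000L, 36_000L); // 36 s per hour
        System.out.println(budget.tryTransmit(0L, 30_000L));         // true
        System.out.println(budget.tryTransmit(1_000L, 10_000L));     // false: 40 s > 36 s
        System.out.println(budget.tryTransmit(3_600_000L, 10_000L)); // true: first entry expired
    }
}
```

The exact regulatory rules may differ in detail, so the window and budget are constructor parameters rather than constants.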

In one of my previous blog posts, I explained how to receive EnOcean telegrams. Now, based on the information above, you can send your own EnOcean telegrams in your smart home or IoT environment.