Motivation

Protocols typically connect two different systems. In an open system with several stakeholders, interoperability between these systems is a fundamental requirement, and there are various ways to ensure it. In this blog post, written in cooperation with my colleague Dr. Guido Frank of the German Federal Office for Information Security (BSI), I present two popular approaches.

Interoperability

Interoperability is a characteristic of a product or system, whose interfaces are completely understood, to work with other products or systems, present or future, in either implementation or access, without any restrictions (Source: Wikipedia).

From a system perspective, this means that all implementations need to comply with the same technical specifications. Interoperability is essential because these systems are open systems with different stakeholders. Here, it refers to the ability of different implementations to work together via cross-system protocols.

Crossover Testing vs. Conformity Testing

To ensure interoperability, implementations need to be tested. In general, there are different approaches to testing systems or implementations.

Crossover Testing

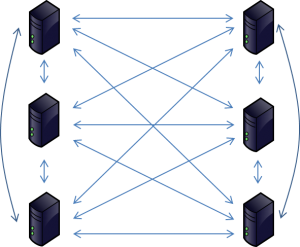

A crossover test checks every system component against every other system component. This procedure detects incompatibilities between existing implementations at a fixed release status.

The effort required for this kind of test increases disproportionately with every additional implementation in the system, because the number of pairings grows quadratically. In practice, therefore, these tests can only be performed with a small number of test partners. The figure above illustrates the interaction between different systems in a crossover test.
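As a rough worked example, assume that every implementation has to be tested exactly once against every other implementation. This is a simplification: if roles such as reader and card, or client and server, are tested in both directions, the count doubles. Under that assumption, the number of pairings for $n$ implementations, and the number of new pairings that the $(n+1)$-th implementation adds, are:

```latex
N_{\text{cross}}(n) = \binom{n}{2} = \frac{n(n-1)}{2},
\qquad
N_{\text{cross}}(n+1) - N_{\text{cross}}(n) = \frac{(n+1)n}{2} - \frac{n(n-1)}{2} = n,
\qquad
N_{\text{cross}}(5) = 10,\quad N_{\text{cross}}(20) = 190
```

Every newcomer therefore has to be paired with all existing implementations. This is why the total effort grows quadratically rather than linearly.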

Another problem of crossover testing is maintenance: every new implementation, and every new version of an existing implementation, must be tested with every corresponding system, which again increases the testing effort significantly. One benefit of crossover testing appears in the early development phase, where it helps developers implement their own system and indicates whether they are on the right track. On the other hand, this kind of testing only covers the positive case in which two correctly behaving systems interact (a “smoke test”). The behaviour of the systems in bad cases is not tested. Additionally, when a crossover test between two different systems fails, it is difficult to decide which system behaves correctly and which implementation has to be changed.
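The maintenance problem can be sketched in a few lines. Assume a full crossover campaign covers every unordered pair of implementations once. Then a new release of a single implementation invalidates every pairing that involves it. The vendor names and the helper functions `crossover_pairs` and `pairs_to_retest` are hypothetical and serve only as an illustration:

```python
from itertools import combinations

def crossover_pairs(implementations):
    """All unordered pairs a full crossover test has to cover (each pair once)."""
    return list(combinations(sorted(implementations), 2))

def pairs_to_retest(implementations, updated):
    """Pairs that must be re-run after a new release of `updated`.

    Every pairing involving the updated implementation is affected,
    i.e. one pair for each of the other implementations.
    """
    return [pair for pair in crossover_pairs(implementations) if updated in pair]

# Hypothetical vendor names, for illustration only.
vendors = ["A", "B", "C", "D", "E"]

print(len(crossover_pairs(vendors)))   # 10
print(pairs_to_retest(vendors, "C"))   # [('A', 'C'), ('B', 'C'), ('C', 'D'), ('C', 'E')]
```

Even a passing retest of, say, `('A', 'C')` only shows that these two implementations agree. It says nothing about how either one handles erroneous input.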

Conformity Testing

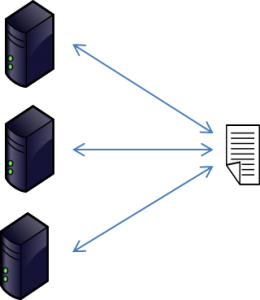

The purpose of conformity tests is to verify that a system implements the specifications correctly (i.e. it “conforms” to the specifications). The corresponding test specifications need to be defined by the stakeholders and are then implemented, e.g. by test labs, in their conformity test tools.

The resulting test suites exercise the implementation under test with protocol data units (PDUs) that mimic both “correct” and “incorrect” system behaviour. The figure above illustrates the interactions between the test suite implementation and the system in a conformity test. The test definitions themselves also have to be checked to ensure that all test participants interpret them in the same, harmonised way.
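The principle can be illustrated with a minimal sketch built on a made-up toy protocol. A PDU here is a 1-byte tag, a 1-byte length and exactly that many value bytes. The implementations under test (`toy_iut`, `lenient_iut`), the test case IDs and the expected verdicts are all hypothetical. The suite sends one correct PDU and two deliberately malformed ones, then compares each reaction with what the (assumed) specification requires:

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical toy protocol: tag (1 byte), length (1 byte), `length` value bytes.

def toy_iut(pdu: bytes) -> str:
    """Hypothetical implementation under test (IUT) that follows the toy spec."""
    if len(pdu) < 2:
        return "REJECT"          # header truncated
    if len(pdu) != 2 + pdu[1]:
        return "REJECT"          # length field does not match the value bytes
    return "ACCEPT"

def lenient_iut(pdu: bytes) -> str:
    """Hypothetical faulty IUT that accepts every PDU."""
    return "ACCEPT"

@dataclass
class ConformityCase:
    case_id: str
    pdu: bytes       # stimulus sent to the IUT
    expected: str    # reaction required by the (toy) specification

def run_suite(iut: Callable[[bytes], str], cases: List[ConformityCase]) -> Dict[str, str]:
    """Send every stimulus to the IUT and compare its reaction with the expected one."""
    return {c.case_id: "PASS" if iut(c.pdu) == c.expected else "FAIL" for c in cases}

SUITE = [
    ConformityCase("TC_GOOD_01", bytes([0x5A, 0x02, 0x01, 0x02]), "ACCEPT"),  # correct PDU
    ConformityCase("TC_BAD_01", bytes([0x5A]), "REJECT"),                     # truncated header
    ConformityCase("TC_BAD_02", bytes([0x5A, 0x05, 0x01]), "REJECT"),         # wrong length
]

print(run_suite(toy_iut, SUITE))
# {'TC_GOOD_01': 'PASS', 'TC_BAD_01': 'PASS', 'TC_BAD_02': 'PASS'}
print(run_suite(lenient_iut, SUITE))
# {'TC_GOOD_01': 'PASS', 'TC_BAD_01': 'FAIL', 'TC_BAD_02': 'FAIL'}
```

The faulty implementation passes the only good case, exactly as it would in a smoke test. It is caught only by the bad cases, and the verdict points to the implementation under test rather than to a pair of systems.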

Conclusion

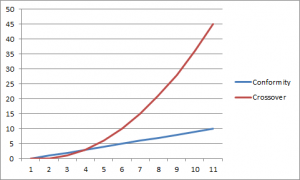

Both testing approaches help to ensure interoperability with other system components to a certain extent. However, as the complexity and the number of systems grow, crossover testing becomes more and more extensive. Only conformity testing scales adequately with the complexity and the number of systems. The diagram above illustrates the increasing effort of crossover tests compared to conformity tests.
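The scaling argument can be made concrete with a deliberately simplified model. Assume one crossover session per unordered pair of implementations and one run of a shared conformity suite per implementation. The model also assumes each session or run costs roughly the same, and it ignores the one-off effort of writing the conformity test specification and tools. The function names are hypothetical:

```python
def crossover_runs(n: int) -> int:
    """Pairwise test sessions for n implementations (each unordered pair once)."""
    return n * (n - 1) // 2

def conformity_runs(n: int) -> int:
    """One run of the shared conformity test suite per implementation."""
    return n

for n in (2, 3, 5, 10, 20, 50):
    print(f"{n:>3} implementations: crossover {crossover_runs(n):>5}, conformity {conformity_runs(n):>3}")
```

Under these assumptions, crossover testing is no more expensive for two or three implementations. With 20 implementations, however, it needs 190 sessions compared with 20 conformity runs. This matches the recommendation to use direct tests only among a small subset of implementations.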

Direct tests between a subset of implementations can be useful during the implementation or integration phase of a node. Such tests could also be arranged bilaterally between different stakeholders, e.g. in pilots. Experience from such tests could also serve as additional input for conformity tests. Full crossover testing or a central coordination of such tests would not be necessary for this purpose. As soon as several system components have to be tested, conformity testing should be chosen.

The benefits of this approach to interoperability testing can also be seen in the so-called ‘InterOp tests’ that have been performed for more than a decade in the context of eMRTD (electronic Machine Readable Travel Documents). Detailed failure analysis helps to improve the stability of the whole eMRTD system. Additionally, the results of the ‘InterOp tests’ help not only to improve the stability of ePassports and inspection systems but also to improve the quality of (test) specifications and test tools.

Banking is another open system with several vendors. All cash cards and credit cards must fulfil international test specifications. This approach to interoperability testing allows customers to use their cards worldwide at the cash machines of various banks.

To ensure interoperability systematically, it is necessary to set up conformity test specifications that test the requirements defined in the technical specifications. The tests should cover not only good cases but also bad cases that mirror the pitfalls typically occurring in a system. Only in this way can real system-wide interoperability be ensured.
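One way to check this systematically is a simple traceability matrix. Each requirement of the technical specification is mapped to the test cases that cover it, and the matrix flags every requirement that lacks either a good case or a bad case. The sketch below assumes that each test case is tagged with one requirement ID and a kind (`"good"` or `"bad"`). All requirement and test case IDs are hypothetical:

```python
from collections import defaultdict

# Hypothetical requirement IDs taken from a technical specification.
REQUIREMENTS = ["REQ-01", "REQ-02", "REQ-03"]

# Hypothetical test cases: (test case ID, requirement covered, "good" or "bad").
TEST_CASES = [
    ("TC_01", "REQ-01", "good"),
    ("TC_02", "REQ-01", "bad"),
    ("TC_03", "REQ-02", "good"),
    ("TC_04", "REQ-03", "bad"),
]

def coverage_gaps(requirements, test_cases):
    """Return, per requirement, the kinds of test case ('good'/'bad') still missing."""
    covered = defaultdict(set)
    for _case_id, requirement, kind in test_cases:
        covered[requirement].add(kind)
    gaps = {}
    for requirement in requirements:
        missing = {"good", "bad"} - covered[requirement]
        if missing:
            gaps[requirement] = sorted(missing)
    return gaps

print(coverage_gaps(REQUIREMENTS, TEST_CASES))  # {'REQ-02': ['bad'], 'REQ-03': ['good']}
```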

Setup of a test specification

Important components of a conformance test specification are:

- Description of general test requirements

- Test setup / Testing environment

- Definition of suitable test profiles / implementation profiles

- Implementation Conformance Statement (ICS)

- Definition of testing or configuration data

- Definition of test cases according to a unified data structure

- Each test case should concentrate on a single feature to be tested
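The interplay between implementation profiles, the Implementation Conformance Statement (ICS) and single-feature test cases can be sketched as follows. This simplified model treats the ICS as a claimed profile plus a set of optional features. A test case is executed only if the feature it targets has been declared; otherwise it is marked as not applicable. The profile names, feature names and test case IDs are hypothetical:

```python
from dataclasses import dataclass
from typing import Dict, List, Set

@dataclass(frozen=True)
class PlannedTest:
    case_id: str
    feature: str  # each test case targets exactly one feature

# Hypothetical implementation profiles: the features each profile requires.
PROFILES: Dict[str, Set[str]] = {
    "basic": {"feature_a"},
    "extended": {"feature_a", "feature_b"},
}

SUITE: List[PlannedTest] = [
    PlannedTest("TC_A_01", "feature_a"),
    PlannedTest("TC_B_01", "feature_b"),
    PlannedTest("TC_C_01", "feature_c"),
]

def select_tests(suite: List[PlannedTest], profile: str, optional: Set[str]) -> Dict[str, str]:
    """Decide per test case whether it runs, based on the ICS (profile + optional features)."""
    declared = PROFILES[profile] | optional
    return {t.case_id: "RUN" if t.feature in declared else "N/A" for t in suite}

print(select_tests(SUITE, "basic", set()))
# {'TC_A_01': 'RUN', 'TC_B_01': 'N/A', 'TC_C_01': 'N/A'}
print(select_tests(SUITE, "extended", {"feature_c"}))
# {'TC_A_01': 'RUN', 'TC_B_01': 'RUN', 'TC_C_01': 'RUN'}
```

Because each test case concentrates on one feature, the ICS maps cleanly onto the set of test cases that apply to a given implementation.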

The test specification structure described here has been established since eMRTD testing began in 2005. It is based on the ISO/OSI layer model: data is tested on layer 7 and protocols are tested on layer 6.

Typical structure of a test case in this context:

- Test case ID: unique identifier for each test case

- Purpose: objective of the test case

- Version: current version of this test case, independent of the test specification

- Reference: where this feature / behaviour is specified

- Preconditions: setup of the test case

- Test scenario: description of the test case, step by step

- Postconditions: teardown of the test case
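A test tool could represent the test case structure above as a small record type. In the sketch below, the field names are illustrative and not taken from any published test specification. The choice of mandatory fields is also an assumption, as is the helper `missing_fields`, which reports mandatory fields that were left empty:

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class TestCase:
    """One test case with the fields listed above (field names are illustrative)."""
    case_id: str                 # unique identifier
    purpose: str                 # objective of the test case
    version: str                 # versioned independently of the test specification
    reference: str               # where the feature / behaviour is specified
    preconditions: List[str] = field(default_factory=list)   # setup
    scenario: List[str] = field(default_factory=list)        # step-by-step test steps
    postconditions: List[str] = field(default_factory=list)  # teardown

    def missing_fields(self) -> List[str]:
        """Names of fields this sketch treats as mandatory but which are empty."""
        mandatory = {
            "case_id": self.case_id,
            "purpose": self.purpose,
            "version": self.version,
            "reference": self.reference,
            "scenario": self.scenario,
        }
        return [name for name, value in mandatory.items() if not value]

complete = TestCase(
    case_id="TC_DEMO_01",
    purpose="Check that a truncated PDU is rejected",
    version="1.0",
    reference="Hypothetical specification, clause 4.2",
    preconditions=["IUT is in idle state"],
    scenario=["Send a PDU with a truncated header", "Check that the IUT rejects the PDU"],
    postconditions=["Reset the IUT"],
)
incomplete = TestCase(case_id="TC_DEMO_02", purpose="", version="1.0", reference="")

print(complete.missing_fields())    # []
print(incomplete.missing_fields())  # ['purpose', 'reference', 'scenario']
```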

Test cases can be combined into test suites that cluster test cases with similar topics or objectives. As the test specifications need to be implemented in suitable testing tools, it is useful to define the test cases from the outset in a form that eases their implementation, e.g. in XML using a suitable XML schema.
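The following sketch shows how a test tool might read one test case from XML using Python's standard `xml.etree.ElementTree` module. The element and attribute names are hypothetical and do not come from any published schema. Validating the document against an XML schema would need an additional library, such as lxml, and is not shown here:

```python
import xml.etree.ElementTree as ET

# Hypothetical XML layout for one test case; element names are illustrative only.
TEST_CASE_XML = """
<testCase id="TC_DEMO_01" version="1.0">
  <purpose>Check that a truncated PDU is rejected</purpose>
  <reference>Hypothetical specification, clause 4.2</reference>
  <preconditions>
    <step>IUT is in idle state</step>
  </preconditions>
  <scenario>
    <step>Send a PDU with a truncated header</step>
    <step>Check that the IUT rejects the PDU</step>
  </scenario>
  <postconditions>
    <step>Reset the IUT</step>
  </postconditions>
</testCase>
"""

def load_test_case(xml_text: str) -> dict:
    """Parse one test case into a plain dict that a test tool could execute."""
    root = ET.fromstring(xml_text.strip())

    def steps(section: str) -> list:
        # Collect the text of every <step> inside the given section element.
        return [step.text for step in root.findall(f"{section}/step")]

    return {
        "id": root.get("id"),
        "version": root.get("version"),
        "purpose": root.findtext("purpose"),
        "reference": root.findtext("reference"),
        "preconditions": steps("preconditions"),
        "scenario": steps("scenario"),
        "postconditions": steps("postconditions"),
    }

tc = load_test_case(TEST_CASE_XML)
print(tc["id"], len(tc["scenario"]))  # TC_DEMO_01 2
```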