A few days ago, the German BSI published a maintenance release of TR-03105 Part 3.2. The new version 1.5.1 mainly contains editorial changes. The tests in this specification focus on eMRTDs and the data groups and protocols they use.

What’s new in TR-03105 Part 3.2 Version 1.5.1?

- Reference documentation: Documents of the same type are now grouped next to each other to make them easier to find

- Reference documentation: Added ICAO Doc 9303 Part 9 and ICAO Doc 9303 Part 12

- Added a new profile “Master File” for eMRTD supporting a Master File as root directory

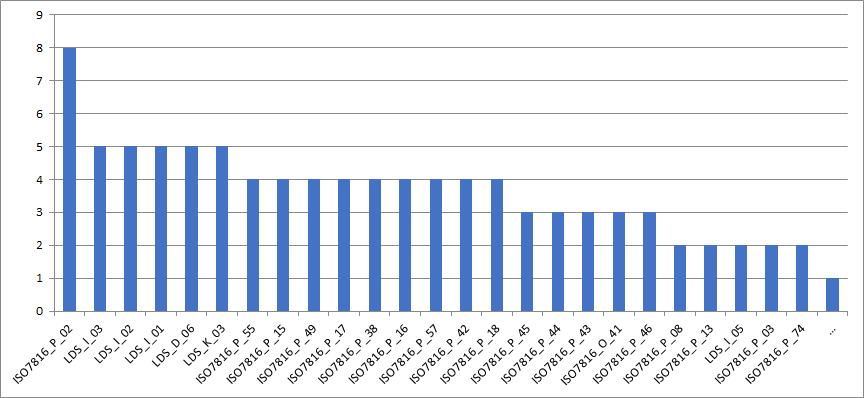

- Unit ISO7816_J: Clarification that all test cases of this test unit that require the “Open ePassport Application” procedure must be performed twice (one test run with BAC and one with PACE) if the chip supports both protocols. If the chip supports only one of these protocols (BAC or PACE), a single test run using the supported protocol in the “Open ePassport Application” procedure is sufficient

- Test case ISO7816_J_12: Clarification in expected result (step 1)

- Test case ISO7816_L_13: Added new profile “MF”

- Test case ISO7816_L_14: Clarification in purpose

- Test case ISO7816_L_15: Clarification in purpose

- Test case ISO7816_L_16: Clarification in purpose

- Some minor editorial changes

The one and only technical change in this version concerns test case ISO7816_L_13, to which the newly specified profile “MF” has been added. This profile ensures that the test case is only performed if the chip supports a Master File. Why is this profile needed? In the field there are several eMRTDs without an MF. With PACE you need an MF to store files like EF.CardAccess or EF.ATR/INFO, but with BAC an MF is not necessary. Clause 4 of ICAO Doc 9303 Part 9 (7th edition) states “An optional master file (MF) may be the root of the file system” and, further on, “The need for a master file is determined by the choice of operating systems and optional access conditions”.
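The two rules described above (a test case only applies if the chip declares the required profile, and unit ISO7816_J needs one test run per supported access protocol) can be sketched in a few lines of Python. This is only an illustration: the function names and the profile sets are hypothetical, and the real test case may require further profiles besides “MF”.

```python
from typing import List, Set


def is_applicable(required_profiles: Set[str], chip_profiles: Set[str]) -> bool:
    """A test case applies only if the chip declares every required profile."""
    return required_profiles <= chip_profiles


def access_runs(supports_bac: bool, supports_pace: bool) -> List[str]:
    """Protocols to use in 'Open ePassport Application', one test run each."""
    runs = []
    if supports_bac:
        runs.append("BAC")
    if supports_pace:
        runs.append("PACE")
    return runs


# Hypothetical chip: BAC only, no Master File.
chip_profiles = {"BAC"}

# Assumption for illustration: the test case requires only the "MF" profile.
print(is_applicable({"MF"}, chip_profiles))  # False: test case is skipped
print(access_runs(supports_bac=True, supports_pace=False))  # ['BAC']
print(access_runs(supports_bac=True, supports_pace=True))   # ['BAC', 'PACE']
```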

Characteristics of smart card file systems

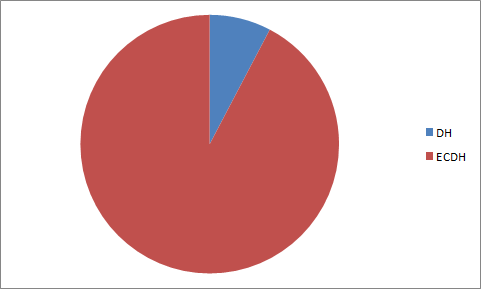

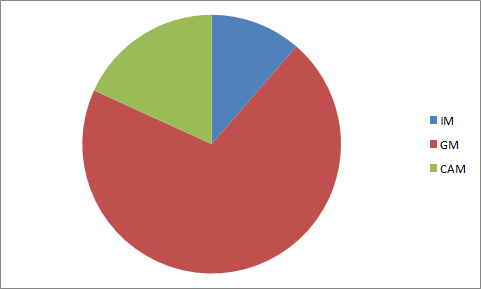

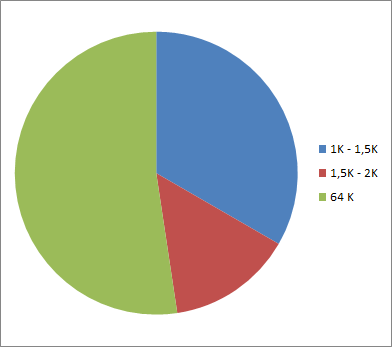

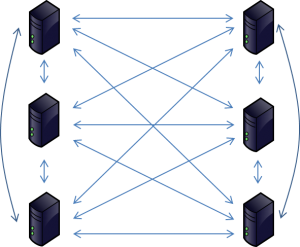

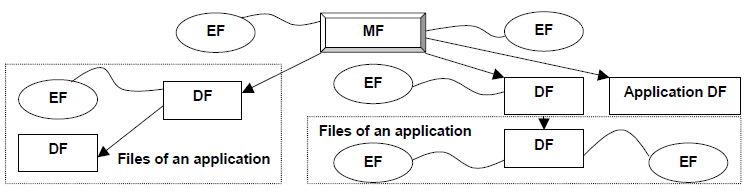

All information of an eMRTD is stored in a file system defined in ISO 7816-4. A typical file system is organized hierarchically into dedicated files (DFs) and elementary files (EFs). Dedicated files can contain elementary files or other dedicated files. Applications are special variants of dedicated files (Application DFs) and have to be selected by their DF name, which indicates the application identifier (AID). Once an application has been selected, the files within this application can be accessed. In this way a smart card file system has a typical tree structure, as shown in the following figure:

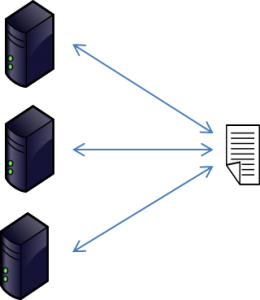

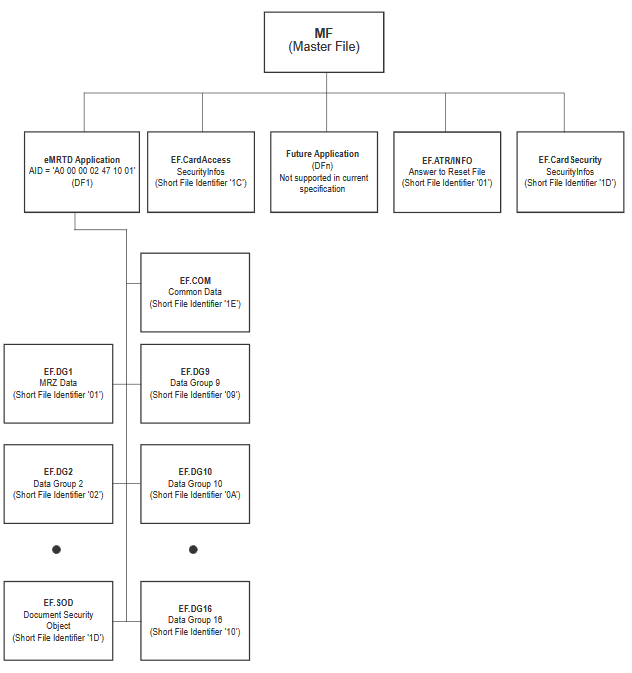
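The tree structure described above can also be written down as a small data structure. The following Python sketch is purely illustrative: the class `FileNode` and the helper `walk` are made-up names, and the selection of files is an assumed example of an eMRTD with a master file, not a complete file system.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class FileNode:
    name: str
    kind: str        # "MF", "DF" or "EF"
    identifier: str  # FID (hex) for MF/EF, AID (hex) for an application DF
    children: List["FileNode"] = field(default_factory=list)


def walk(node: FileNode, depth: int = 0) -> Iterator[Tuple[int, FileNode]]:
    """Yield every node of the tree together with its depth below the root."""
    yield depth, node
    for child in node.children:
        yield from walk(child, depth + 1)


# Assumed example layout: an eMRTD application DF below a master file.
emrtd_app = FileNode("eMRTD Application", "DF", "A0000002471001", [
    FileNode("EF.COM", "EF", "011E"),
    FileNode("EF.DG1", "EF", "0101"),
    FileNode("EF.DG2", "EF", "0102"),
    FileNode("EF.SOD", "EF", "011D"),
])
master_file = FileNode("MF", "MF", "3F00", [
    FileNode("EF.CardAccess", "EF", "011C"),
    FileNode("EF.ATR/INFO", "EF", "2F01"),
    emrtd_app,
])

# Print the tree with two spaces of indentation per level.
for depth, node in walk(master_file):
    print("  " * depth + f"{node.name} ({node.kind}, {node.identifier})")
```

A chip without a master file would simply lack the top level of this tree, which is why files such as EF.CardAccess have no place to live there.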

Additionally, ICAO Doc 9303 Part 10 describes another sample file structure with a focus on eMRTDs. In this case, too, the master file is the root of the file system, and all additional files or applications are nodes or leaves of the tree. For instance, the following figure shows a sample eMRTD file system including a master file:

Likewise, the eMRTD Application in the figure is the Application DF mentioned above in the ISO 7816 file structure. Both EFs and DFs can be selected with the SELECT APDU. While an EF is selected with the command ’00 A4 02 0C <Lc> <FID>’, an application is selected with the APDU ’00 A4 04 0C <Lc> <AID>’ (where <Lc> is the length of the command data, <FID> is the file ID and <AID> is the application ID). The difference lies in parameter P1 of the APDU: 04 indicates ‘Select by DF name’ and 02 indicates ‘Select EF under the current DF’.
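To make the two SELECT variants concrete, here is a minimal Python sketch that assembles the command bytes described above. The helper names are hypothetical, and the example values (FID 01 1E for EF.COM and the AID A0 00 00 02 47 10 01 of the eMRTD application) are used only for illustration. The sketch builds plain APDUs and does not cover Secure Messaging.

```python
def select_apdu(p1: int, data: bytes, p2: int = 0x0C) -> bytes:
    """Build a SELECT command: CLA=00, INS=A4, P1, P2, Lc, data."""
    if not 1 <= len(data) <= 255:
        raise ValueError("data must fit into a short Lc (1..255 bytes)")
    return bytes([0x00, 0xA4, p1, p2, len(data)]) + data


def select_ef(fid: bytes) -> bytes:
    """P1 = 02: select an EF under the current DF by its two-byte FID."""
    if len(fid) != 2:
        raise ValueError("a file identifier is exactly two bytes long")
    return select_apdu(0x02, fid)


def select_application(aid: bytes) -> bytes:
    """P1 = 04: select an application DF by its DF name (AID)."""
    if not 1 <= len(aid) <= 16:
        raise ValueError("a DF name is between 1 and 16 bytes long")
    return select_apdu(0x04, aid)


# Example values, assumed for illustration.
print(select_ef(bytes.fromhex("011E")).hex(" ").upper())
# 00 A4 02 0C 02 01 1E
print(select_application(bytes.fromhex("A0000002471001")).hex(" ").upper())
# 00 A4 04 0C 07 A0 00 00 02 47 10 01
```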

Characteristics of a Master File

You can address the master file in almost the same manner as an EF or DF. A master file has a special file identifier that is reserved for master files: ‘3F 00’. This FID can be used to select the MF, and ISO 7816 specifies the APDU ’00 A4 00 0C 02 3F 00’ for this purpose. ICAO Doc 9303 Part 10 (clause 3.9.1.1), on the other hand, specifies an alternative APDU to select the MF: ’00 A4 00 0C’. In short: both commands are valid and select the MF. Beyond that, a note in the same clause of Doc 9303 recommends not using the SELECT MF command. Why might this APDU cause problems? You can find the answer in ISO 7816 (clause 11.1.1): “If the current DF is neither a descendant of, nor identical to the previously selected DF, then the security status specific to the previously selected DF shall be revoked”. To sum up: the MF is neither the eMRTD application DF nor one of its descendants, so selecting it during Secure Messaging changes the directory, which results in closing the secure channel and losing the secure session.
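The rule quoted from ISO 7816 can be modelled as a simple path comparison. The Python sketch below is an assumption-laden illustration, not card behaviour taken from a specification: DFs are represented as tuples of names from the root downwards, and `keeps_security_status` is a hypothetical helper. The two constants are the SELECT MF commands mentioned above.

```python
from typing import Tuple

Path = Tuple[str, ...]

# The two SELECT MF variants mentioned in the text.
SELECT_MF_WITH_FID = bytes.fromhex("00A4000C023F00")  # with FID 3F 00
SELECT_MF_SHORT = bytes.fromhex("00A4000C")           # without data field


def keeps_security_status(previous_df: Path, current_df: Path) -> bool:
    """True if current_df is identical to, or a descendant of, previous_df."""
    return current_df[:len(previous_df)] == previous_df


mf: Path = ("MF",)
emrtd: Path = ("MF", "eMRTD Application")

# Staying inside the eMRTD application keeps its security status.
print(keeps_security_status(previous_df=emrtd, current_df=emrtd))  # True
# Selecting the MF leaves the application DF: the status is revoked.
print(keeps_security_status(previous_df=emrtd, current_df=mf))     # False
# Moving from the MF down into the application is a descendant selection.
print(keeps_security_status(previous_df=mf, current_df=emrtd))     # True
```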